
How Long Should You Run Your A/B Test?

No matter what type of business you have, A/B testing can be a great way to generate more engagement and revenue from your email. The idea behind A/B testing is simple: send 2 different versions of an email campaign and find out how modest changes—like subject line, from name, content, or sending time—can have a big impact on your results.

Our research has shown that not only do A/B-tested campaigns lead to much better open and click rates than regular campaigns, they typically yield more revenue, too.

But not all A/B tests are created equal. The length of the test and the way you determine a winner play key roles in a test’s overall effectiveness.

Test what you’re trying to convert

Before you set up an A/B test, it’s important to decide the goal—and the intended outcome—of your campaign. There are plenty of possible reasons to choose one winning metric over another, but these 3 scenarios can give you an idea of how to pick a winner based on your goals:

- Drive traffic to your site. Perhaps you run a website or blog that generates revenue by hosting ads. In this type of situation, your winning metric should be clicks.

- Have subscribers read your email. Maybe you’re sending a newsletter that contains ads that pay out by the impression, or you’re simply disseminating information. In those instances, you should use opens to decide the winning email.

- Sell stuff from your connected store. If you’re using email to promote your newest and best-selling products or you’re testing different incentives to encourage shoppers to buy, you should use revenue as the winning metric.

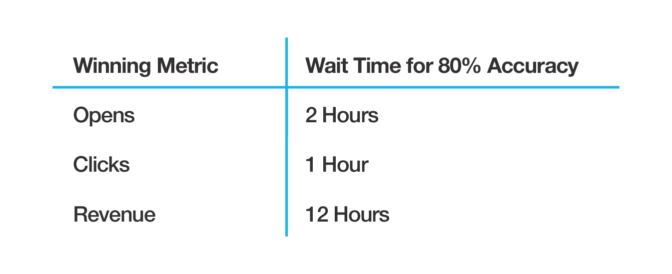

Why does this matter? The table below shows the amount of time you should wait for each testing metric before you’ll be confident in the outcome, based on our research.

You’ll notice the optimal times are quite different for each metric, and we don’t want you to waste your time or choose a winner too soon! Now, let’s dig into the data to take a closer look at how we came up with our suggested wait times—and see why it’s so important to use the right winning metrics.

Clicks and opens don’t equal revenue

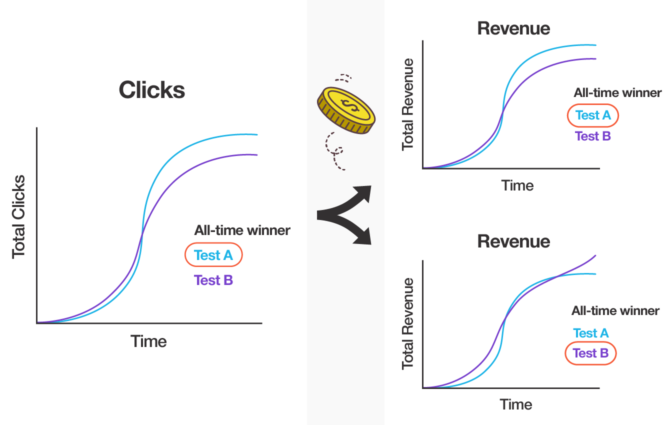

Since it takes longer to confidently determine a winner when you’re testing for revenue, you might be tempted to test for opens or clicks as a stand-in for revenue.

Unfortunately, we found that opens and clicks don’t predict revenue any better than a coin flip!

Even when one version clearly wins on click rate, picking the winner by clicks is as likely to select the version that earns less revenue as the one that earns more. The same holds when you use open rates to predict revenue. So, if it’s revenue you’re after, it’s best to take the extra time and test for it directly.
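If you want to check this against your own past campaigns, one rough way is to count how often the click winner and the revenue winner are the same version. The sketch below is only an illustration: the field names (`clicks_a`, `revenue_b`, and so on) and the sample numbers are made up, not taken from our research or from any particular export format.

```python
def winner(a, b):
    """Return "A" or "B" for the larger value, or None on a tie."""
    if a == b:
        return None
    return "A" if a > b else "B"


def proxy_agreement(tests, proxy="clicks", target="revenue"):
    """Fraction of tests where the proxy metric and the target metric
    pick the same version. Tests with a tie on either metric are skipped."""
    agree = 0
    decided = 0
    for t in tests:
        proxy_winner = winner(t[f"{proxy}_a"], t[f"{proxy}_b"])
        target_winner = winner(t[f"{target}_a"], t[f"{target}_b"])
        if proxy_winner is None or target_winner is None:
            continue
        decided += 1
        if proxy_winner == target_winner:
            agree += 1
    return agree / decided if decided else None


# Hypothetical past tests, for illustration only.
past_tests = [
    {"clicks_a": 120, "clicks_b": 90, "revenue_a": 400.0, "revenue_b": 650.0},
    {"clicks_a": 75, "clicks_b": 110, "revenue_a": 300.0, "revenue_b": 520.0},
]
print(proxy_agreement(past_tests))
```

An agreement rate near 0.5 over many tests would mean clicks tell you about as much as a coin flip about which version earns more.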

How long should you wait?

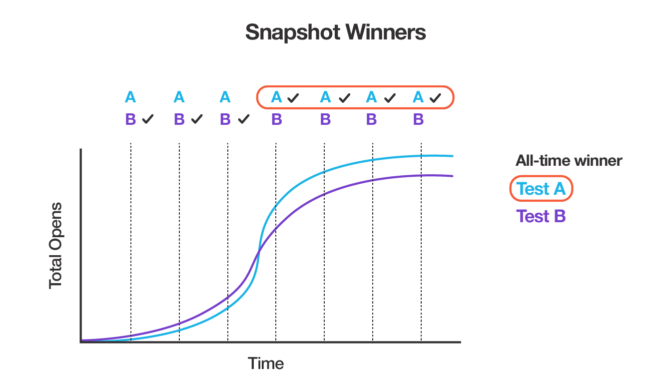

We looked at almost 500,000 of our users’ A/B tests that had our recommended 5,000 subscribers per test to determine the best wait time for each winning metric (clicks, opens, and revenue). For each test, we took snapshots at different times and compared the winner at the time of the snapshot with the test’s all-time winner.

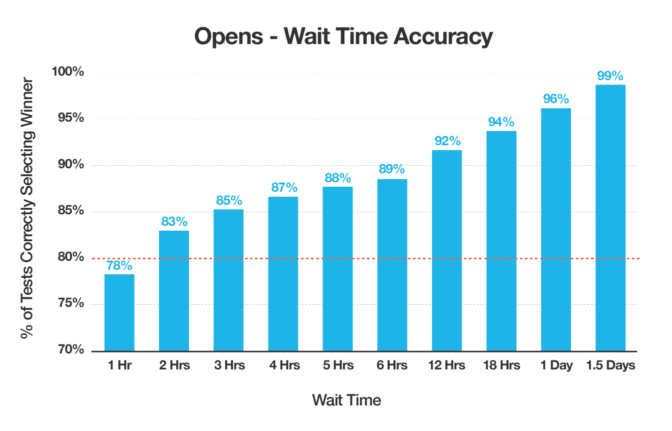

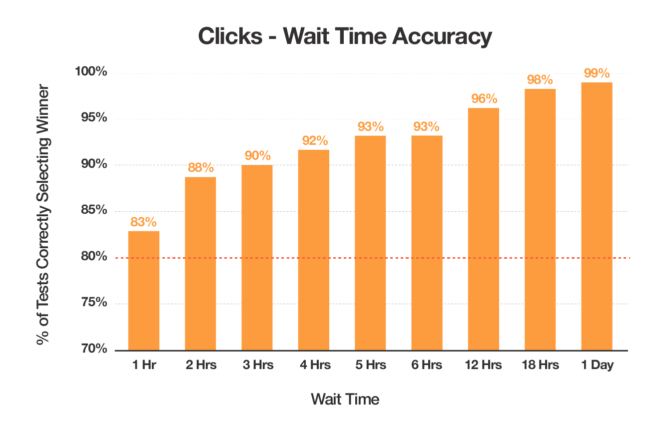

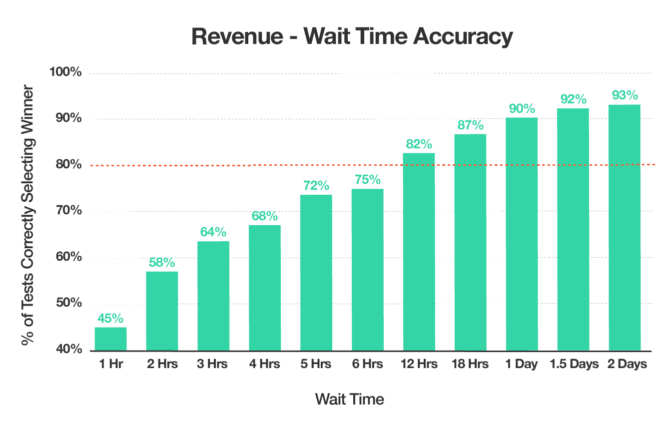

For each snapshot, we calculated the percentage of tests that correctly predicted the all-time winner. Here’s how the results shook out.
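The snapshot comparison described above can be sketched roughly as follows. This is a simplified illustration rather than the code used in the research: the `Snapshot` structure is an assumption, and the last snapshot of each test stands in for the all-time result.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    hours: float  # hours elapsed since the campaign was sent
    a: float      # cumulative metric (opens, clicks, or revenue) for version A
    b: float      # cumulative metric for version B


def leader(a, b):
    """Return "A", "B", or "tie"."""
    if a > b:
        return "A"
    if b > a:
        return "B"
    return "tie"


def snapshot_accuracy(tests, hours):
    """Share of tests whose leader at `hours` matches the final leader.

    Each test is a list of Snapshot objects sorted by time. Tests with no
    snapshot at or before `hours`, or with a tied final result, are skipped.
    """
    correct = 0
    counted = 0
    for snaps in tests:
        final = leader(snaps[-1].a, snaps[-1].b)
        early = [s for s in snaps if s.hours <= hours]
        if not early or final == "tie":
            continue
        counted += 1
        if leader(early[-1].a, early[-1].b) == final:
            correct += 1
    return correct / counted if counted else None


# Hypothetical data: version A wins both tests in the end,
# but it trails in the second test after 1 hour.
sample_tests = [
    [Snapshot(1, 10, 8), Snapshot(24, 50, 40)],
    [Snapshot(1, 5, 7), Snapshot(24, 30, 20)],
]
print(snapshot_accuracy(sample_tests, hours=1))
print(snapshot_accuracy(sample_tests, hours=24))
```

Running the same function at several values of `hours` gives an accuracy-over-time curve for each metric.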

For opens, we found that wait times of 2 hours correctly predicted the all-time winner more than 80% of the time, and wait times of 12+ hours were correct over 90% of the time.

Clicks with wait times of just 1 hour correctly chose the all-time winner 80% of the time, and wait times of 3+ hours were correct over 90% of the time. Even though clicks happen after opens, using clicks as the winning metric can more quickly home in on the winner.

Revenue takes the longest to determine a winner, which might not be surprising: opens happen first, some of those opens convert to clicks, and only some of the people who click end up buying. So it pays to be patient. You’ll need to wait 12 hours to correctly choose the winning campaign 80% of the time. For 90% accuracy, it’s best to let the test run for an entire day.
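For quick reference, the wait times above can be kept in a small lookup. The function name and structure here are only an illustration. The hour values are the ones reported in this article, with "an entire day" taken as 24 hours.

```python
# Hours to wait before picking a winner, keyed by metric and by the
# approximate share of tests where the early winner matched the
# all-time winner. Values come from the findings described above.
WAIT_HOURS = {
    "opens": {80: 2, 90: 12},
    "clicks": {80: 1, 90: 3},
    "revenue": {80: 12, 90: 24},
}


def recommended_wait_hours(metric, accuracy=80):
    """Return the suggested wait in hours for a metric and accuracy level."""
    try:
        return WAIT_HOURS[metric][accuracy]
    except KeyError:
        raise ValueError(
            f"no recommendation for metric={metric!r}, accuracy={accuracy!r}"
        ) from None


print(recommended_wait_hours("clicks"))
print(recommended_wait_hours("revenue", accuracy=90))
```

Treat these numbers as starting points, not guarantees. Your own list may behave differently.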

A quick recap

So, what are the key takeaways from this data? When you’re running A/B tests, it’s important to:

- Pick a winner based on the metric that matches your desired outcome.

- Remember that clicks and opens aren’t a substitute for revenue.

- Be patient. Letting your tests run long enough will help you be more confident that you’re choosing the right winner. We recommend waiting at least 2 hours to determine a winner based on opens, 1 hour based on clicks, and 12 hours based on revenue. In our data, those wait times predicted the all-time winner about 80% of the time. For about 90% accuracy, wait 12 hours for opens, 3 hours for clicks, and a full day for revenue.

Keep in mind that while this data is a great starting point, our insights are drawn from a large, diverse user base and could differ from the results you’ll see in your own account.

Each list is unique, so set up your own A/B tests and experiment with different metrics and durations to help determine which yields the best (and most accurate) results for your business.

And if the size of your list or segment doesn’t allow for our recommended 5,000 subscribers in each combination, consider testing your entire list and using the campaign results to inform future campaign content decisions.

Original article written by Ben
